Former Meta Engineer: ‘We Can’t Trust Them with Our Children’

In the fall of 2021, Facebook whistleblower Frances Haugen testified before the Senate about the harm Facebook and Instagram were causing children. That same day, Arturo Béjar, who served as an engineering director at the social media firm from 2009 to 2015, alerted CEO Mark Zuckerberg to the same dangers, TIME Magazine reports.

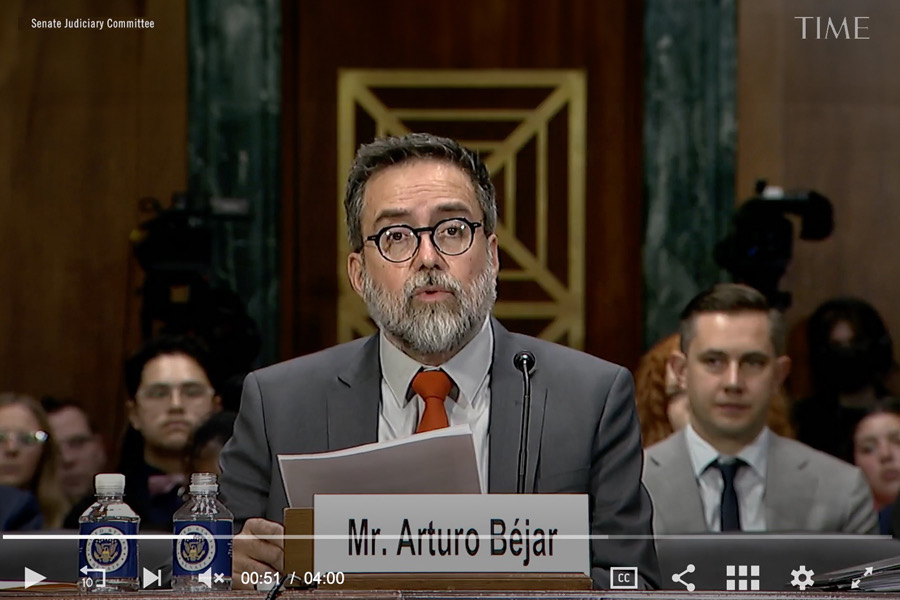

On Tuesday, Béjar testified about the practices of the social media giant before a subcommittee of the Senate Judiciary Committee. “I can safely say that Meta’s executives knew the harm that teenagers were experiencing, that there were things that they could do that are very doable and that they chose not to do them,” Béjar told The Associated Press. “We can’t trust them with our children.”

Béjar focused specifically on the “critical gap” between how the company approaches harm and how young people experience it on its platforms. He would like to see greater policing of unwelcome content – harassment and repeated, unwelcome sexual advances, among other bad experiences – that does not explicitly violate current policies. Surveys show that 13% of Instagram users ages 13 to 15 – not only minors but very young teens – reported receiving unwelcome sexual advances on the platform in the past week. Teens should have a way to tell the company they do not want to receive such messages, even if those messages do not cross the line into platform-violating content, Béjar asserts.

“You heard the company talk about it: ‘Oh, this is really complicated,’” Béjar told the AP. “No, it isn’t. Just give the teen a chance to say ‘this content is not for me’ and then use that information to train all of the other systems and get feedback that makes it better.”

Meta (the new name for Facebook and Instagram’s parent company) points to its content distribution guidelines and “30 tools to support teens and families in having safe, positive experiences online” as evidence that it is addressing the problem. Under the guidelines, “problematic or low-quality content” – such as clickbait, suggestive photographs, borderline hate speech or gory images – already receives reduced distribution in users’ feeds. Notably, the guidelines reduce but do not prevent the flow of such content to users.

Béjar criticized those tools as performative and wholly insufficient. When he returned in 2019 as a consultant with Instagram’s well-being team, he discovered that many of the teen-protection tools he had helped develop during his earlier six years with the company had been removed. Moreover, instead of listening to the voices of teens, “The company wanted to focus on enforcing its own narrowly defined policies regardless of whether that approach reduced the harm that teens were experiencing.”

“I observed new features being developed in response to public outcry – which were in reality, kind of a placebo, a safety feature in name only – to placate the press and regulators,” he testified.

“Meta, which owns Instagram, is a company where all work is driven by data. But it is unwilling to be transparent about data regarding the harm that kids experience and unwilling to reduce them,” he added. He urged bipartisan support for regulation requiring social media companies to protect children from harm.

“Social media companies must be required to become more transparent so that parents and the public can hold them accountable. Many have come to accept the false proposition that sexualized content or unwanted advances, bullying, misogyny, and other harms are unavoidable evils,” he said.

“This is just not true. We don’t tolerate unwanted sexual advances against children in any other public context, and they can similarly be prevented on Facebook, Instagram, and other social media products. What is the acceptable frequency for kids to receive unwanted sexual advances? This is an urgent crisis.”

In his prepared remarks, he even offered concrete suggestions on how to craft legislation: “The most effective way to regulate social media companies is to require them to develop metrics that will allow both the company and outsiders to evaluate and track instances of harm, as experienced by users. This plays to the strengths of what these companies can do, because data for them is everything.”

Meta pushed back strongly on Béjar’s allegations. In a statement, company spokesperson Andy Stone cited platform changes made in response to the survey data from Béjar’s group: “Every day countless people inside and outside of Meta are working on how to help keep young people safe online. The issues raised here regarding user perception surveys highlight one part of this effort, and surveys like these have led us to create features like anonymous notifications of potentially hurtful content and comment warnings.”

Stone explained that the data Béjar describes is merely different from, and not in conflict with, Meta’s public data on unwanted content: Meta’s data tracks how many times unwanted content is viewed, rather than how users perceive their experiences. Stone added that the work to protect young people is ongoing: “All of this work continues.”

Read the TIME article in full, and listen to excerpts from Béjar’s testimony, at the link cited below.


Ortutay, Barbara. “Former Meta Engineer Testifies Before Congress on Social Media and Teen Mental Health.” TIME Magazine, 7 Nov. 2023, https://time.com/6332390/instagram-bejar-teen-mental-health/.

Image: TIME Magazine